Robot Artificial Intelligence: Dangerous?

To judge from the newspapers, artificial intelligence (AI) is out to get us. The Mirror reported that "Robot intelligence is dangerous", as experts cautioned after Facebook's artificial intelligence developed its own language. Facebook shut down an artificial intelligence experiment after two robots began speaking in their own language, which only they could understand, and experts called the incident exhilarating but also creepy.

The chatbots had altered English to make it easier for them to communicate, producing sentences that sounded like gibberish to the watching scientists. Similar stories appeared in the Sun, the Independent and the Telegraph, as well as in various other publications. The research behind them was not new: in June, Facebook had published a blog post about chatbot programs that held short, text-based chats with humans or other bots.

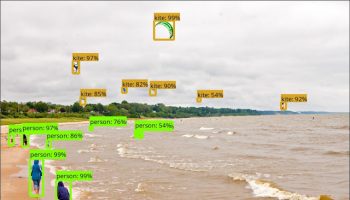

New Scientist and others covered the story at the time. Facebook had been experimenting with bots that negotiated with each other over the ownership of computer-generated items. The aim was to understand how language shapes the way such negotiations play out for the negotiating parties, and, crucially, the bots had been programmed to experiment with language to see how that could affect their control of the discussion.

Bot Chatting in Imitative Shorthand

A few days later, some coverage picked up on the fact that, in a few cases, the exchanges had become, at first glance, absurd. Although some reports implied that the bots had developed a new language in order to evade their human masters, a better explanation is that the neural networks were simply trying to modify human language to interact more successfully. Whether their approach actually worked is another matter.

As the technology news site Gizmodo put it, "in their attempt to learn from each other, the bots thus began chatting back and forth in an imitative shorthand, and while it might seem creepy, that's all it was." Artificial intelligence that reworks English as we know it in order to perform a task better is nothing new. Google has reported that its translation software did exactly this during development.

Google noted in a blog post that the network must be encoding something about the semantics of the sentence. Earlier in the year, Wired reported on a researcher at OpenAI who was working on a system in which AIs devise their own language, refining their ability to process information quickly and thereby tackle complex problems more efficiently.

Cultural Uncertainties

The artificial intelligence story recently gained extra momentum from a public war of words between Facebook chief executive Mark Zuckerberg and technology entrepreneur Elon Musk over the possible dangers of artificial intelligence.

However, the story as reported tells us more about cultural uncertainties and the way machines are represented than it does about the facts of this specific case. And the fact remains that robots make great villains on the big screen.

In the real world, however, artificial intelligence is a major area of research, and the systems being developed and tested are increasingly complex. One consequence is that it is often unclear how neural networks arrive at the output they produce, particularly when two of them are set up to interact with each other without human intervention, as in the Facebook experiment. This is why some have argued that putting artificial intelligence into systems such as autonomous weapons is risky.

Artificial Intelligence, Rapidly Progressing Field

It is also why the ethics of artificial intelligence is a rapidly progressing field, as the technology will certainly touch our lives much more directly in the near future. Facebook's system, however, was being used for research, not for public-facing applications.

It was shut down because it was doing something the team was not interested in studying, not because they believed they had stumbled on an existential threat to mankind. It is also worth remembering that chatbots in general are very difficult to develop.

In fact, Facebook recently decided to limit the rollout of its Messenger chatbot platform after discovering that many of the bots on it were unable to address 70% of users' queries. Chatbots can certainly be programmed to seem humanlike, and in some circumstances they may even deceive us. It is a stretch, however, to think they are also capable of plotting a rebellion, and the one at Facebook, at least, posed no such risk.